- Will judge whether it is in range of a User Unit

- Will check its health and inventory to find out whether it needs healing and has a health vial to do so

- Will flee from any User Unit whose range it is in, moving in the opposite direction until it is one tile out of range
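The three checks above can be sketched in plain C# as follows. This is a minimal sketch under assumptions the original does not state: positions are integer grid coordinates, range is measured as Manhattan (four-direction) distance, and the names GridPos, EnemyAI, FleeTarget and the 50% healThreshold are all hypothetical. It ignores obstacles and board edges, which the project's pathfinding would have to respect.

```csharp
using System;

// Hypothetical grid position; the project's tiles store a _pos instead.
public readonly struct GridPos
{
    public readonly int X, Y;
    public GridPos(int x, int y) { X = x; Y = y; }

    // Manhattan distance: assumes four-direction movement on a square grid.
    public static int Distance(GridPos a, GridPos b) =>
        Math.Abs(a.X - b.X) + Math.Abs(a.Y - b.Y);
}

public static class EnemyAI
{
    // True if the enemy is within the user unit's attack range.
    public static bool InRangeOf(GridPos enemy, GridPos userUnit, int userRange) =>
        GridPos.Distance(enemy, userUnit) <= userRange;

    // Heal only when below the (hypothetical) threshold AND a health vial is held.
    public static bool ShouldHeal(int health, int maxHealth, int healthVials,
                                  float healThreshold = 0.5f) =>
        healthVials > 0 && health < maxHealth * healThreshold;

    // Step away from the user unit one tile at a time until the enemy is
    // exactly one tile outside its range. Ignores obstacles and board edges.
    public static GridPos FleeTarget(GridPos enemy, GridPos userUnit, int userRange)
    {
        int x = enemy.X, y = enemy.Y;
        while (GridPos.Distance(new GridPos(x, y), userUnit) <= userRange)
        {
            int dx = x - userUnit.X, dy = y - userUnit.Y;
            // Move along the axis where the enemy is already further away;
            // each such step increases the Manhattan distance by exactly one.
            if (Math.Abs(dx) >= Math.Abs(dy))
                x += dx >= 0 ? 1 : -1;
            else
                y += dy > 0 ? 1 : -1;
        }
        return new GridPos(x, y);
    }
}
```

Stepping along the axis on which the enemy is already furthest away guarantees that every step adds exactly one to the distance, so the loop stops precisely one tile outside the range rather than overshooting.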

Before reaching this point, I discovered and fixed two bugs:

Current Unit Index

I have been using an int, currentUnitIndex, to cycle through the units in the units list. When the index reaches units.Count, it is reset to 0 and the cycle starts again. I discovered that when a unit died and its turn came round, the index could end up pointing past the end of the now shorter list, throwing errors. Thankfully, the fix was simple: in the gameManager Update method, I added a single if statement:

```csharp
if (currentUnitIndex >= units.Count)
{
    currentUnitIndex = 0;
    pathfinding.target.transform.position = units[currentUnitIndex].currentTile._pos;
    pathfinding.FindPath();
}
```

This means that if the index goes past the end of the list, it is knocked back down to 0, the pathfinding target is reset to the current unit's tile position, and a new path is found.
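An alternative to catching the overflow in Update is to keep the index valid at the moment a unit is removed. The sketch below is a hypothetical, Unity-free TurnOrder class (not the project's gameManager) that wraps with a modulo and shifts the index down when an earlier unit in the list dies.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-alone turn cycle; the real project keeps units and
// currentUnitIndex on the gameManager instead.
public class TurnOrder<T>
{
    private readonly List<T> units;
    public int CurrentIndex { get; private set; }

    public TurnOrder(IEnumerable<T> initial) { units = new List<T>(initial); }

    public int Count => units.Count;
    public T Current => units[CurrentIndex];

    // Advance to the next unit, wrapping back to 0 past the end of the list.
    public void NextTurn()
    {
        if (units.Count == 0) return;
        CurrentIndex = (CurrentIndex + 1) % units.Count;
    }

    // Remove a dead unit and keep the index inside the list.
    public void RemoveAt(int index)
    {
        units.RemoveAt(index);
        if (index < CurrentIndex)
            CurrentIndex--;     // units after the removed one shifted down by one
        if (CurrentIndex >= units.Count)
            CurrentIndex = 0;   // same wrap-around check as in Update
    }
}
```

Doing the adjustment inside RemoveAt means the unit whose turn it is keeps it when an earlier unit dies; if the current unit itself dies, the next unit slides into its slot and takes the turn.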

Movement Issues

I thought I had handled all the movement issues, but soon realised I hadn't: I could move the first User Unit, but the second would not draw a path. The path target was not being reset, so the path itself was never drawn. I fixed this by adding a single line to my Move() method:

```csharp
public void Move()
{
    if (units[currentUnitIndex].moving == false)
    {
        units[currentUnitIndex].moving = true;
        units[currentUnitIndex].attacking = false;
    }
    else
    {
        units[currentUnitIndex].moving = false;
        units[currentUnitIndex].attacking = false;
        // Added line: reset the path target to the current unit's own tile
        pathfinding.target.transform.position = units[currentUnitIndex].currentTile._pos;
    }
}
```

Adding the line that resets pathfinding.target.transform.position in the else branch meant that the path target is reset, and the path is found correctly again once the mouse starts moving over tiles.
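Both branches of Move() clear attacking and differ only in which way moving is set, so the method amounts to a toggle. The sketch below expresses that with hypothetical UnitTurnState and MoveToggle types, passing the path-target reset in as a callback; it is meant to illustrate the logic, not as a replacement for the project's code.

```csharp
using System;

// Minimal stand-in for a unit's turn state (hypothetical; mirrors the
// moving/attacking flags used in Move()).
public class UnitTurnState
{
    public bool Moving;
    public bool Attacking;
}

public static class MoveToggle
{
    // Flip the moving flag, always clear attacking, and run the supplied
    // reset (e.g. snapping the path target back to the unit's tile)
    // whenever movement is switched off.
    public static void Toggle(UnitTurnState unit, Action resetPathTarget)
    {
        unit.Moving = !unit.Moving;
        unit.Attacking = false;
        if (!unit.Moving)
            resetPathTarget();
    }
}
```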

AI Implementation

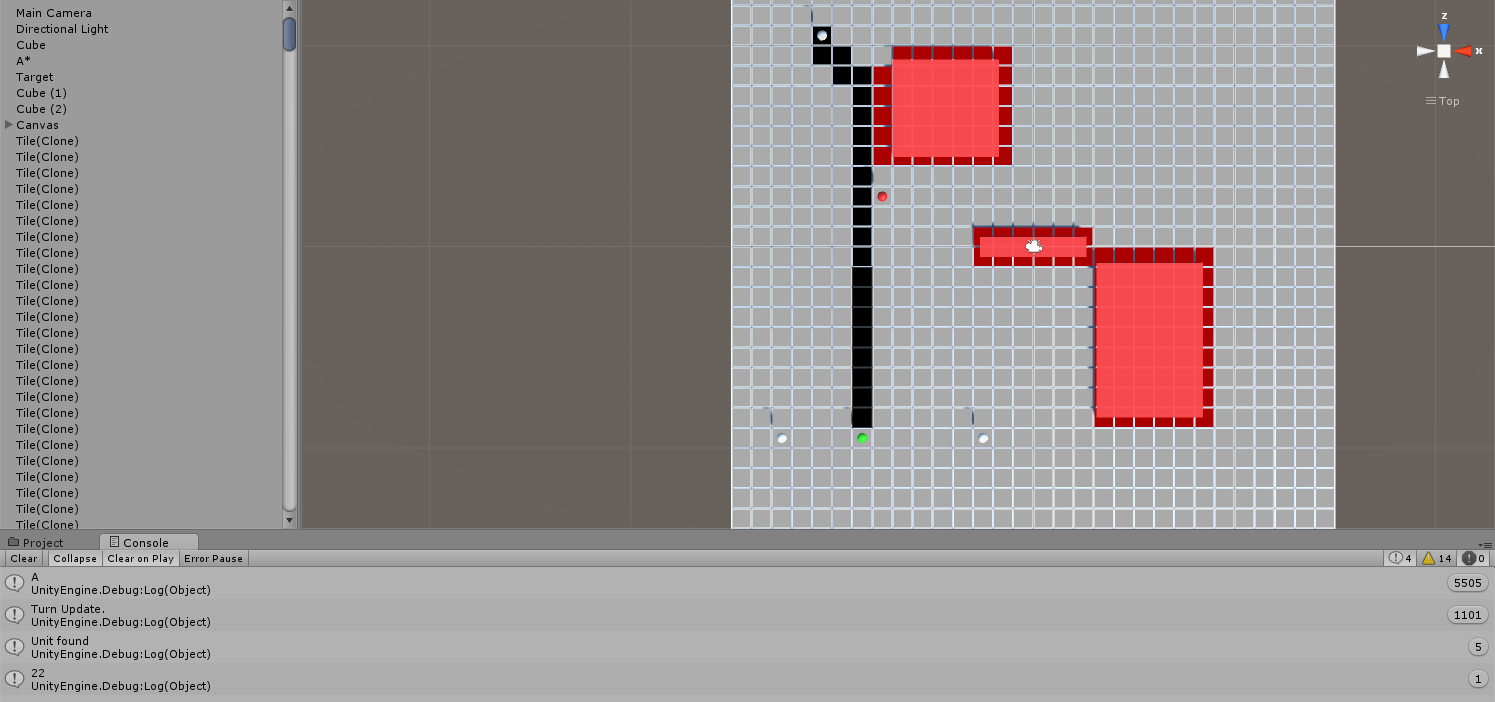

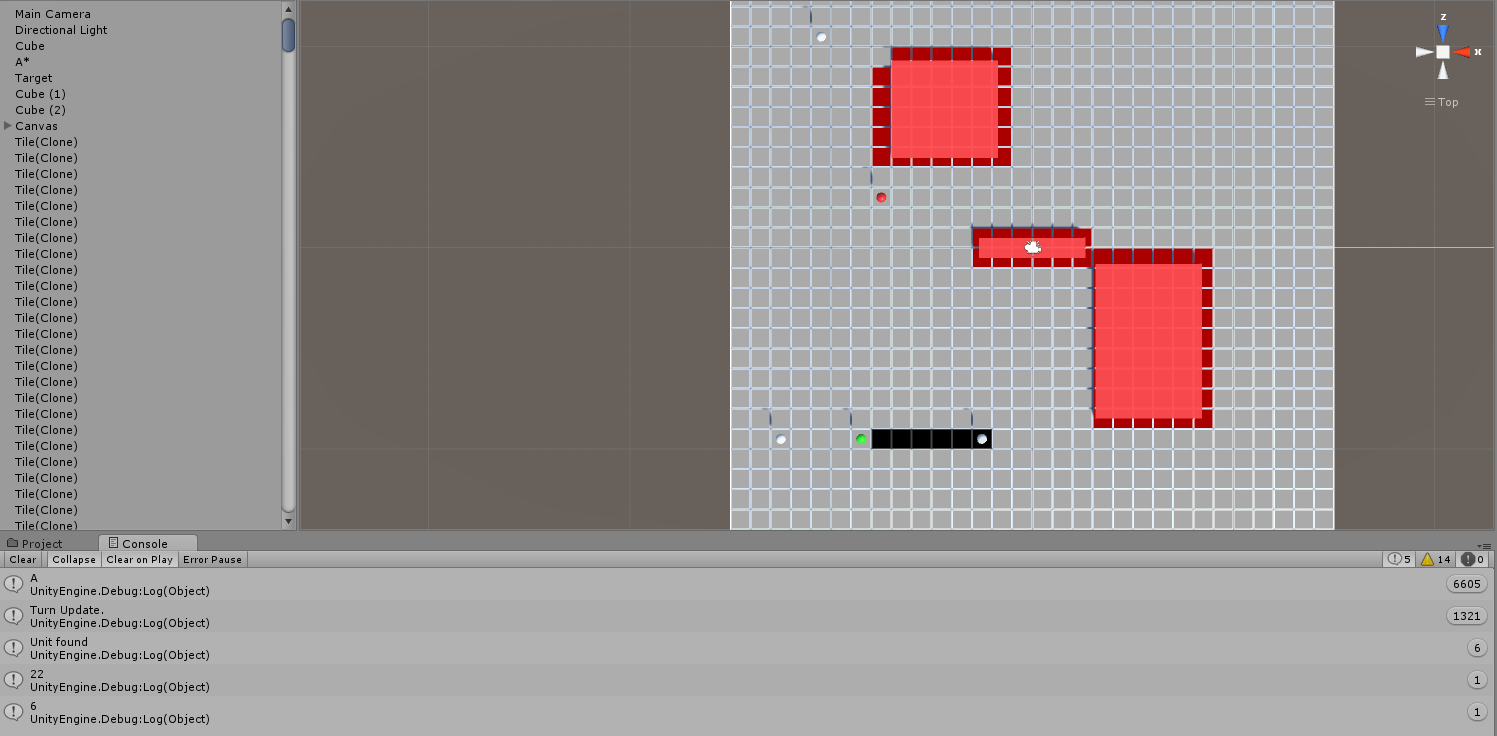

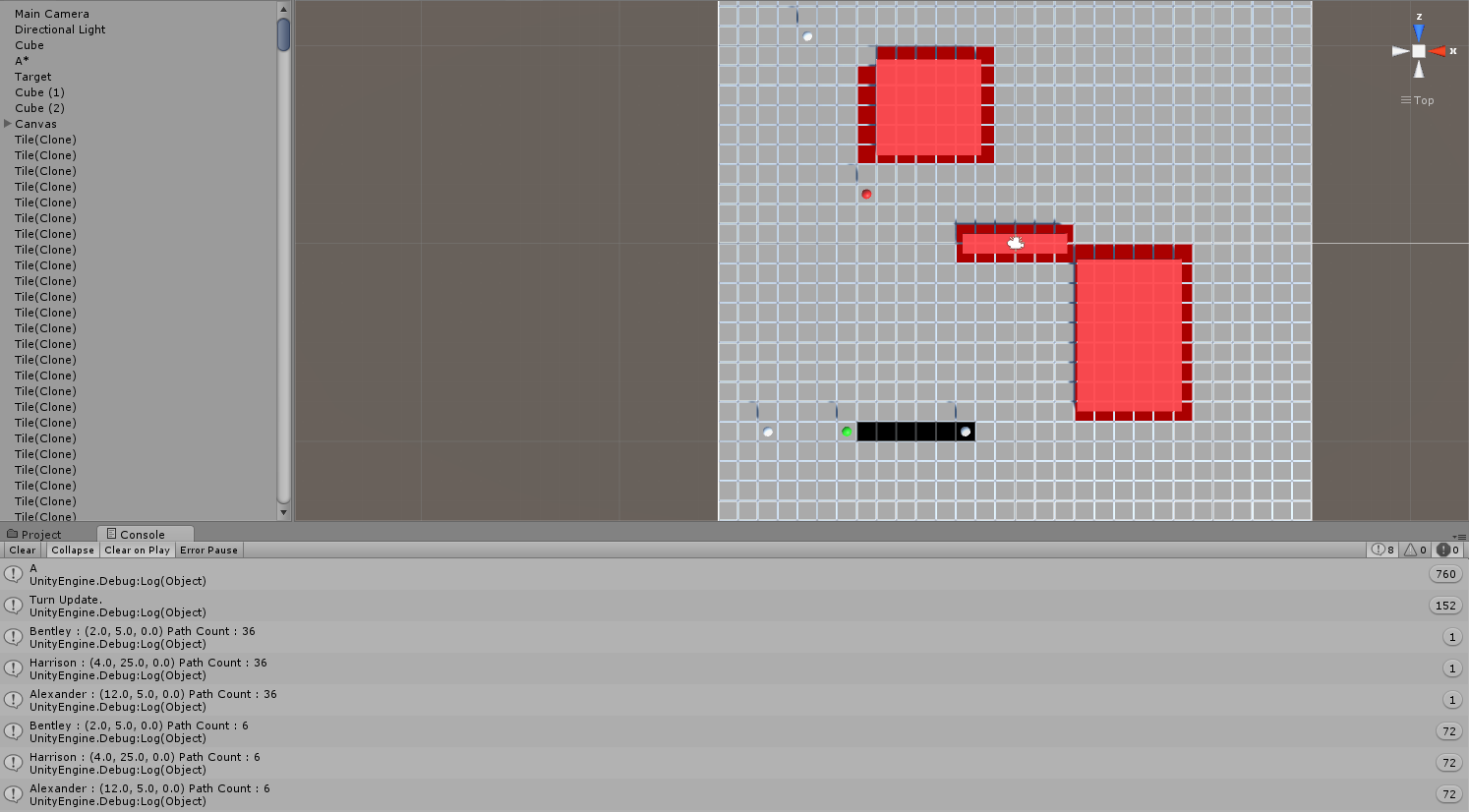

Having fixed these two bugs on the Friday, I returned to the AI implementation on the Saturday, adding the behaviours listed at the top of this post. Once they were in place, I tried out a couple of potential actions the AI could perform, which led to the following:

| Scenario | Result |
|---|---|
| Enemy A attacks | Enemy B attacks too |
| Enemy A retreats | Enemy B's movement fails |

My lecturer and I discussed what I had done, and I demonstrated the issue. Debugging the moveCurrentPlayer coroutine, we discovered that instead of following the path one tile at a time, as intended, the unit was making large jumps along it: it moved one tile, then two at once, then three, before coming to a stop (in this case, two tiles away from the User Unit).

I had set up the move method as an IEnumerator coroutine so that I could use yield; this is what allowed units to "walk" from tile to tile. It had caused some issues before, and I had realised earlier in the project that it might cause more further down the road, as I considered the approach rather quick and dirty.
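The intended tile-by-tile walk can be illustrated without Unity. In Unity, a coroutine that runs `yield return null` is resumed on the next frame; the hypothetical WalkPath below does the same, and calling MoveNext() by hand stands in for the engine's per-frame resumption. Each resumption should move the walker exactly one tile.

```csharp
using System.Collections;
using System.Collections.Generic;

// Hypothetical unit that only records which tile it stands on.
public class Walker
{
    public (int X, int Y) Position;
}

public static class PathWalk
{
    // Moves the walker one tile per resumption. In Unity, `yield return null`
    // resumes on the next frame; here a caller drives it with MoveNext().
    public static IEnumerator WalkPath(Walker walker, List<(int X, int Y)> path)
    {
        foreach (var tile in path)
        {
            walker.Position = tile;
            yield return null;   // pause after exactly one tile
        }
    }
}
```

If a unit appears to skip tiles, one possibility worth checking (a guess, not the cause identified here) is that more than one coroutine is driving it, for example StartCoroutine being called again before the previous walk has finished.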

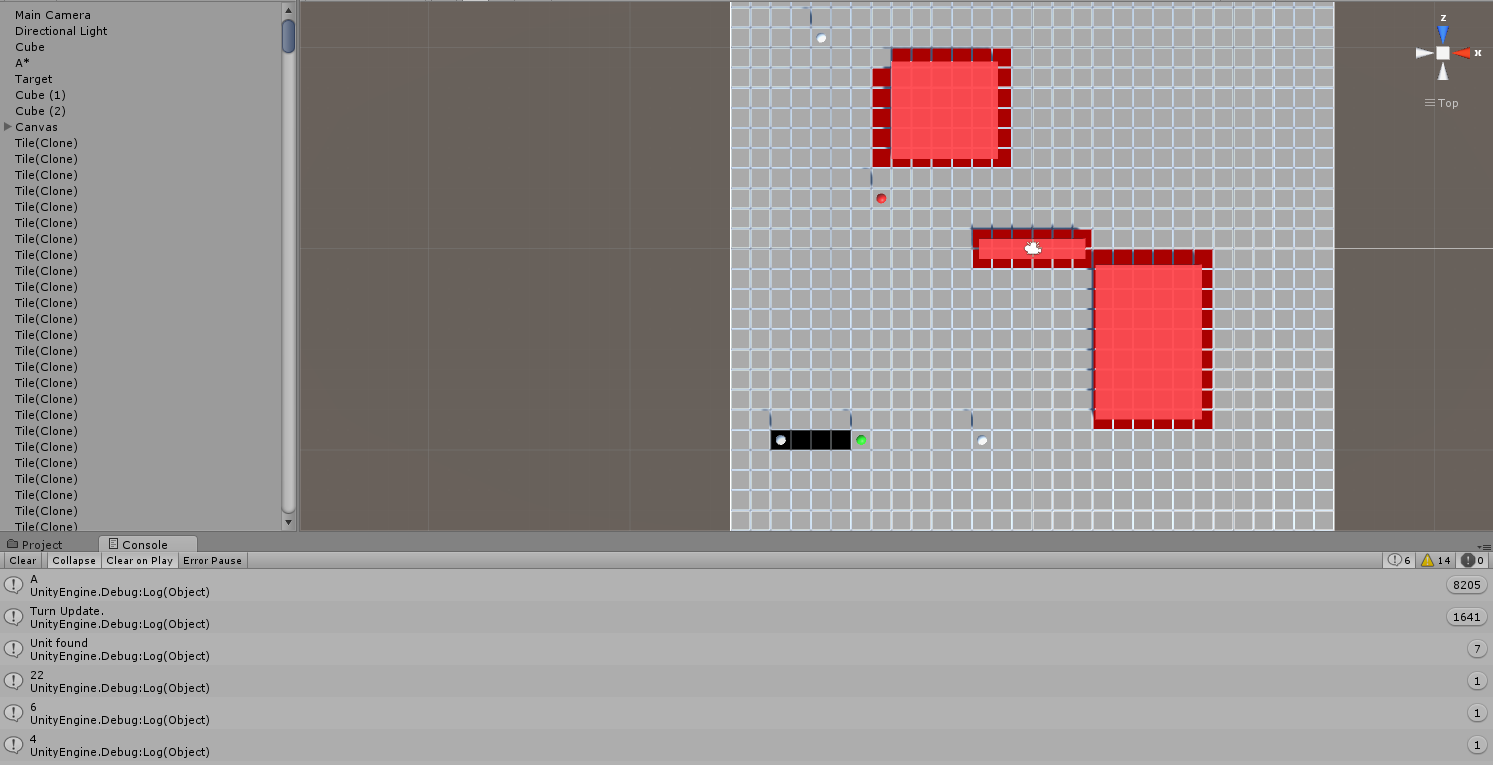

When the IEnumerator was changed to a standard void method with the yield removed, units teleported straight to the destination tile, but in the scenario above Enemy B now moved and attacked successfully. My lecturer deduced that the yield may have been causing the issue.

For now, I have switched it to a void method. I intend to finish the rest of the AI, which is my priority, and then attempt to implement the Unique Support Mechanic before the project is finished. Should time allow, I will then try to add visual movement back into the project using a cleaner method.
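One shape a cleaner, Time-based approach could take is a mover that is advanced from Update instead of a coroutine. This is a hypothetical sketch: TimedPathMover, Tick and stepInterval are illustrative names, and in Unity Tick would be called with Time.deltaTime.

```csharp
using System.Collections.Generic;

// Hypothetical non-coroutine mover: the owner calls Tick with the frame time
// and the unit steps one tile whenever stepInterval seconds have built up.
public class TimedPathMover
{
    private readonly List<(int X, int Y)> path;   // must be non-empty; path[0] is the start tile
    private readonly float stepInterval;
    private float timer;

    public int Index { get; private set; }
    public (int X, int Y) Position => path[Index];
    public bool Done => Index >= path.Count - 1;

    public TimedPathMover(List<(int X, int Y)> path, float stepInterval)
    {
        this.path = path;
        this.stepInterval = stepInterval;
    }

    public void Tick(float deltaTime)
    {
        if (Done) return;
        timer += deltaTime;
        // Advance at most one tile per call, so a long frame can never make
        // the unit jump several tiles at once.
        if (timer >= stepInterval)
        {
            timer -= stepInterval;
            Index++;
        }
    }
}
```

Because Tick moves at most one tile per call, a long frame delays the walk rather than making the unit jump; the leftover time carries over, so the unit catches up over the following frames.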

I realise now that I took a risk using the IEnumerator. It was the quickest option at the time and did what was required, but had I put extra time into creating my own movement system, or one based around Time, it could have saved me time at this point in the project. On the other hand, I could have got stuck working that out, which might have delayed me further. Regardless, it has made me think harder about risk/reward trade-offs in coding, and weighing them up is something I will need to build into my work ethic.

Below you can find all code relating to the project up to the 25th of April, 2016.